Projects I've Done

Sometimes I feel down that I haven't done much. Writing these out has helped.

-

Issues with Concept Bottleneck Models

"Concept learning" is one (limited) approach to getting some interpretability into neural network models. Perhaps the most prominent class of these models is the "concept bottleneck model". At the XAI workshop at ICML 2021, we showed that, without modification, this class of models is fundamentally flawed as a tool for model interpretability. My main contribution was finding the leakage at the root of the problem (though I didn't understand its implications) and running various experiments to prove the point and show the mechanism. This motivated a NeurIPS paper by other folks in the lab (and a couple of other NeurIPS papers from other groups)! Plus we were (very minorly) cited by Anthropic in their paper on decomposing language models and by DeepMind in their paper on interpreting AlphaZero's acquisition of chess skills.

I'm proud of this work, but it also kind of soured me on this kind of concept learning. It feels like an interpretability half-measure with no good story for getting to full interpretability. Though concept intervention is cool.
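For readers who haven't seen one, here is a minimal sketch of the general shape of a concept bottleneck model and of concept intervention. It is not the code from our paper: the weights, the two-concept setup, and every function name are made up for illustration. The only point is that the label prediction sees nothing but the concept values, so a person can overwrite a concept and watch the prediction change.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical, hand-picked weights (illustration only, not trained).
CONCEPT_WEIGHTS = [[2.0, -1.0], [-1.5, 2.5]]  # 2 input features -> 2 concepts
LABEL_WEIGHTS = [3.0, -3.0]                   # 2 concepts -> 1 label score


def predict_concepts(x):
    """First stage: map raw input features to concept probabilities."""
    return [sigmoid(sum(w * xi for w, xi in zip(row, x)))
            for row in CONCEPT_WEIGHTS]


def predict_label(concepts):
    """Second stage: predict the label from the concept values alone."""
    return sigmoid(sum(w * c for w, c in zip(LABEL_WEIGHTS, concepts)))


def forward(x, interventions=None):
    """Run both stages, optionally overwriting concepts by index."""
    concepts = predict_concepts(x)
    for index, value in (interventions or {}).items():
        concepts[index] = value  # a human corrects this concept
    return concepts, predict_label(concepts)


concepts, label = forward([1.0, 0.0])
fixed_concepts, fixed_label = forward([1.0, 0.0], interventions={0: 0.0})
print(concepts, label)
print(fixed_concepts, fixed_label)
```

Setting concept 0 to 0.0 by hand flips the label score from above 0.5 to below it, which is the intervention behavior in miniature.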

-

Identifying crumbling buildings in Baltimore from aerial photos

As part of the Data Science for Social Good fellowship, I worked with the city of Baltimore to detect crumbling residential row homes from aerial photos and tabular city data. I went into the fellowship wanting to sharpen my problem-scoping skills and to see what data science was like in a number of different non-profit contexts. We spent the majority of the time on scoping and understanding the problem. I taught the other two members of my team how neural networks and random forests work, and we used these to create a well-performing model that was audited for various fairness metrics. We're working with the city to get it deployed on their infrastructure so they can use and maintain it themselves. The working version is private, but there's a public version of the code.

-

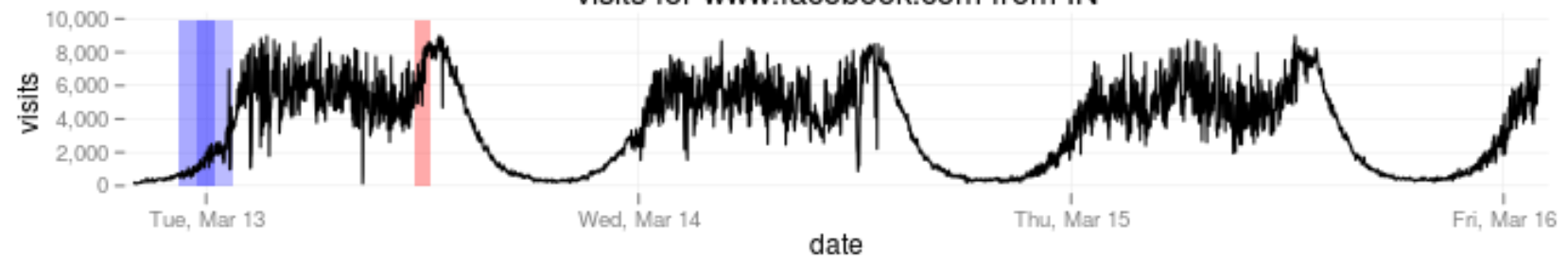

Wikipedia traffic analysis for censorship and outage detection

While working on a different project to monitor Internet censorship, I realized that inbound traffic statistics can be used to detect when a website may be inaccessible in a given area. After I wrote and demoed a proof of concept, we worked with the Wikimedia Foundation to deploy it on their internal data. It quickly detected censorship events and natural disasters in numerous countries, and the WMF policy team found it useful enough to have it rewritten and maintained by an internal WMF team.
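The core idea can be sketched in a few lines. This is a toy illustration under my own assumptions, not the proof of concept or the system the WMF runs: the function name, the 7-observation window, the 50% threshold, and the sample numbers are all hypothetical. It flags any point where traffic from a region falls well below the median of the preceding window.

```python
from statistics import median


def find_traffic_drops(counts, window=7, threshold=0.5):
    """Return indices where traffic falls below `threshold` times the
    median of the preceding `window` observations."""
    drops = []
    for i in range(window, len(counts)):
        baseline = median(counts[i - window:i])
        if baseline > 0 and counts[i] < threshold * baseline:
            drops.append(i)
    return drops


# Made-up daily page views from one country, with a two-day collapse.
daily_views = [1000, 1040, 980, 1010, 990, 1020, 1005, 995, 120, 110, 1015]
print(find_traffic_drops(daily_views))  # [8, 9]
```

A median baseline is used here so that a short outage does not immediately drag the baseline down with it. A real deployment would also need to handle weekly seasonality and low-traffic regions, which this sketch ignores.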

-

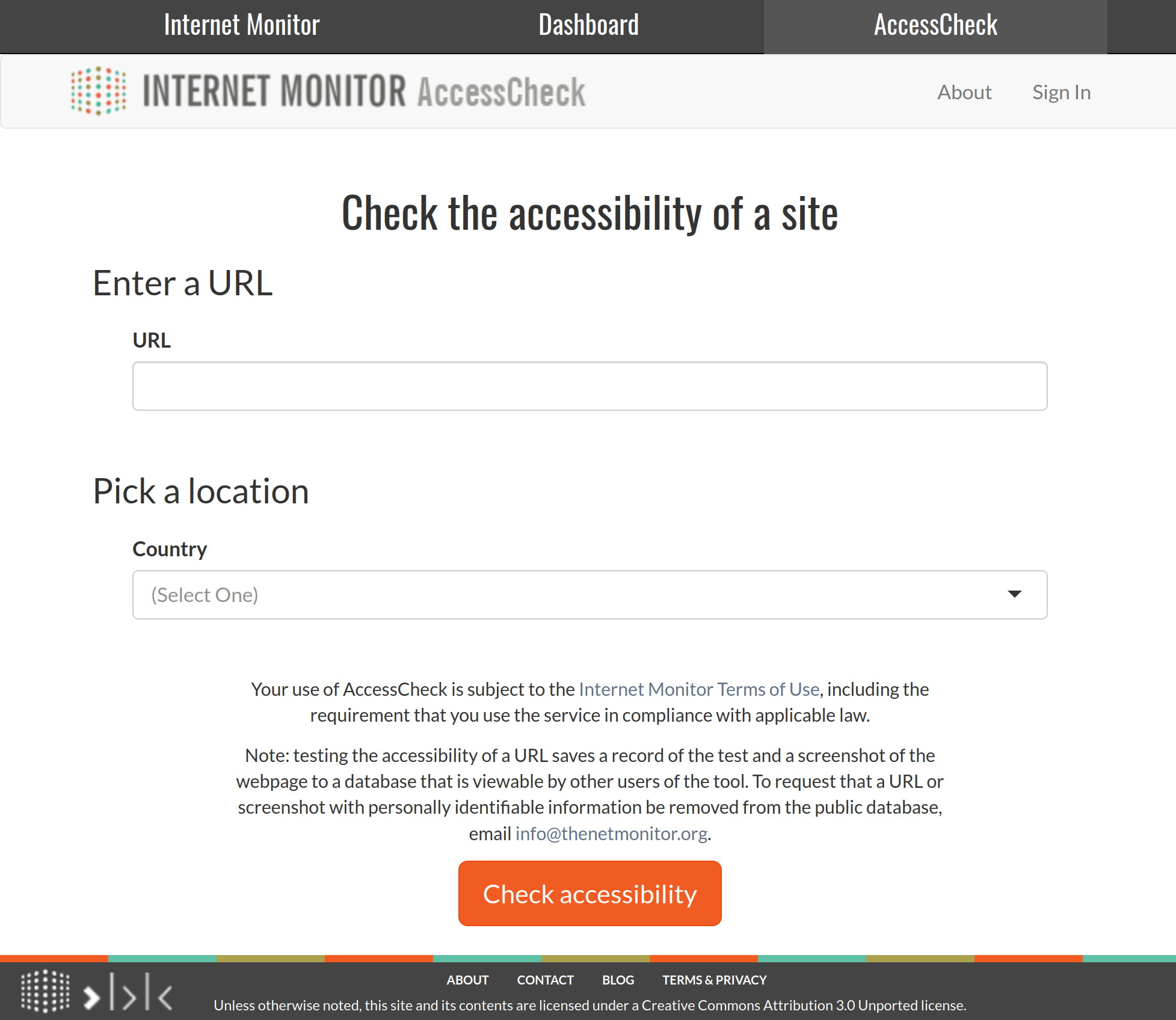

Internet Monitor AccessCheck – Real-time world-wide Internet censorship monitoring

This is the largest software project where I wrote most of the code. As part of a large project from the State Department's Democracy, Human Rights and Labor group, we were tasked with answering two questions: "Which countries are censoring what?" and "How is this changing over time?" To answer them, we had to build two things: a synced database of all the results from various groups' Internet censorship research, and a tool for assessing the censorship status of various web services in real time when no existing data was available. I successfully built and deployed beta versions of these pieces before the project was ended for other reasons.

-

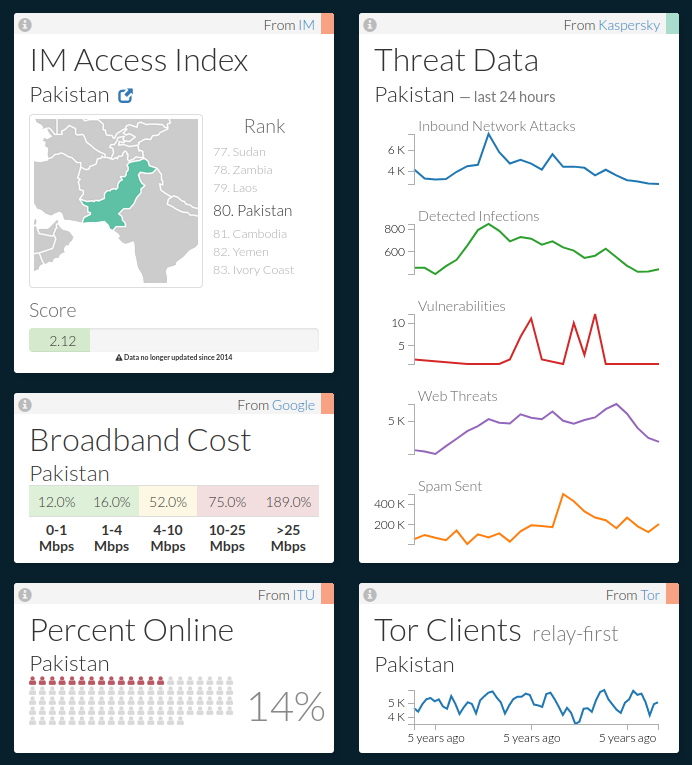

Internet Monitor Dashboard – Getting pretty, useful Internet data in front of policy folks

This was a fun one mostly because I got to do all the design (as well as most everything else). The thinking was that folks who study the Internet would find a real-time dashboard of Internet metrics useful. I helped write this dashboard from scratch. It consisted of real-time data fetching from a bunch of sources, ETL stuff, and real-time publishing out to the front end. It was engineered and documented so others could easily contribute new dashboard widgets, and we actually had an intern successfully make a bunch of widgets for us.

The project died, but I'm happy with the technical and visual designs I made.

-

Bias Bounty data science competition entry

Bias Bounty was a short-lived competition idea where people would compete for prizes by finding bias issues in machine learning algorithms. I was pretty excited when Twitter used the bug bounty idea to get feedback on issues with one of their algorithms, so I was jazzed about this concept. The first (and only) competition launched through Bias Bounty ended up being a regular machine learning competition where the goal was to accurately predict perceived age, perceived gender, and skin tone from images of people. It felt fraught, but I competed, spent a few days on it, and came in third (and got some money!).

There were some real issues with the execution of the concept, which saddens me because I was rooting for it, but the idea lives on in other things, like the DEFCON AI Village Red Teaming.